Artificial Intelligence

Google’s open MedGemma AI models could transform healthcare

Instead of keeping their new MedGemma AI models locked behind expensive APIs, Google will hand these powerful tools to healthcare developers.

The new arrivals are called MedGemma 27B Multimodal and MedSigLIP, and they’re part of Google’s growing collection of open-source healthcare AI models. What makes these special isn’t just their technical prowess, but the fact that hospitals, researchers, and developers can download them, modify them, and run them however they see fit.

Google’s AI meets real healthcare

The flagship MedGemma 27B model doesn’t just read medical text like previous versions did; it can actually “look” at medical images and understand what it’s seeing. Whether it’s chest X-rays, pathology slides, or patient records potentially spanning months or years, it can process all of this information together, much like a doctor would.

The performance figures are quite impressive. When tested on MedQA, a standard medical knowledge benchmark, the 27B text model scored 87.7%. That puts it within spitting distance of much larger, more expensive models whilst costing about a tenth as much to run. For cash-strapped healthcare systems, that’s potentially transformative.

The smaller sibling, MedGemma 4B, might be more modest in size but it’s no slouch. Despite being tiny by modern AI standards, it scored 64.4% on the same benchmark, making it one of the best performers in its weight class. More importantly, when US board-certified radiologists reviewed chest X-ray reports it had written, they deemed 81% accurate enough to guide actual patient care.

MedSigLIP: A featherweight powerhouse

Alongside these generative AI models, Google has released MedSigLIP. At just 400 million parameters, it’s practically featherweight compared to today’s AI giants, but it’s been specifically trained to understand medical images in ways that general-purpose models cannot.

This little powerhouse has been fed a diet of chest X-rays, tissue samples, skin condition photos, and eye scans. The result? It can spot patterns and features that matter in medical contexts whilst still handling everyday images perfectly well.

MedSigLIP creates a bridge between images and text. Show it a chest X-ray, and ask it to find similar cases in a database, and it’ll understand not just visual similarities but medical significance too.
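The "find similar cases" idea can be sketched as a nearest-neighbour search over embedding vectors. This is only an illustration under stated assumptions: it presumes some encoder has already mapped each image to a vector in a shared image-text space, and the vectors, case names, and helper functions below are hypothetical placeholders rather than MedSigLIP output or its API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, database, top_k=2):
    """Return the top_k (name, score) pairs ranked by similarity to the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical, hand-written embeddings standing in for encoder output.
database = {
    "case_a": [0.9, 0.1, 0.0],
    "case_b": [0.1, 0.9, 0.2],
    "case_c": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # hypothetical embedding of the query X-ray

results = most_similar(query, database)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because text and images would share the same vector space in such a setup, the same ranking function could take an embedded text query instead of an embedded image.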

Healthcare professionals are putting Google’s AI models to work

The proof of any AI tool lies in whether real professionals actually want to use it. Early reports suggest doctors and healthcare companies are excited about what these models can do.

DeepHealth in Massachusetts has been testing MedSigLIP for chest X-ray analysis. They’re finding it helps spot potential problems that might otherwise be missed, acting as a safety net for overworked radiologists. Meanwhile, at Chang Gung Memorial Hospital in Taiwan, researchers have discovered that MedGemma works with traditional Chinese medical texts and answers staff questions with high accuracy.

Tap Health in India has highlighted something crucial about MedGemma’s reliability. Unlike general-purpose AI that might hallucinate medical facts, MedGemma seems to understand when clinical context matters. It’s the difference between a chatbot that sounds medical and one that actually thinks medically.

Why open-sourcing the AI models is critical in healthcare

Beyond generosity, Google’s decision to make these models open is also strategic. Healthcare has unique requirements that standard AI services can’t always meet. Hospitals need to know their patient data isn’t leaving their premises. Research institutions need models that won’t suddenly change behaviour without warning. Developers need the freedom to fine-tune for very specific medical tasks.

By open-sourcing the AI models, Google has addressed these concerns about healthcare deployments. A hospital can run MedGemma on its own servers, modify it for its specific needs, and trust that it’ll behave consistently over time. For medical applications where reproducibility is crucial, this stability is invaluable.

However, Google has been careful to emphasise that these models aren’t ready to replace doctors. They’re tools that require human oversight, clinical correlation, and proper validation before any real-world deployment. The outputs need checking, the recommendations need verifying, and the decisions still rest with qualified medical professionals.

This cautious approach makes sense. Even with impressive benchmark scores, medical AI can still make mistakes, particularly when dealing with unusual cases or edge scenarios. The models excel at processing information and spotting patterns, but they can’t replace the judgment, experience, and ethical responsibility that human doctors bring.

What’s exciting about this release isn’t just the immediate capabilities, but what it enables. Smaller hospitals that couldn’t afford expensive AI services can now access cutting-edge technology. Researchers in developing countries can build specialised tools for local health challenges. Medical schools can teach students using AI that actually understands medicine.

The models are designed to run on single graphics cards, with the smaller versions even adaptable for mobile devices. This accessibility opens doors for point-of-care AI applications in places where high-end computing infrastructure simply doesn’t exist.

As healthcare continues grappling with staff shortages, increasing patient loads, and the need for more efficient workflows, AI tools like Google’s MedGemma could provide some much-needed relief. Not by replacing human expertise, but by amplifying it and making it more accessible where it’s needed most.

(Photo by Owen Beard)

See also: Tencent improves testing creative AI models with new benchmark

Want to learn more about AI and big data from industry leaders? Check out AI & Big Data Expo taking place in Amsterdam, California, and London. The comprehensive event is co-located with other leading events including Intelligent Automation Conference, BlockX, Digital Transformation Week, and Cyber Security & Cloud Expo.

Explore other upcoming enterprise technology events and webinars powered by TechForge here.


Zuckerberg outlines Meta’s AI vision for ‘personal superintelligence’

Meta CEO Mark Zuckerberg has laid out his blueprint for the future of AI, and it’s about giving you “personal superintelligence”.

In a letter, the Meta chief painted a picture of what’s coming next, and he believes it’s closer than we think. He says his teams are already seeing early signs of progress.

“Over the last few months we have begun to see glimpses of our AI systems improving themselves,” Zuckerberg wrote. “The improvement is slow for now, but undeniable. Developing superintelligence is now in sight.”

So, what does he want to do with it? Forget AI that just automates boring office work: Zuckerberg and Meta’s vision for personal superintelligence is far more intimate. He imagines a future where technology serves our individual growth, not just our productivity.

In his words, the real revolution will be “everyone having a personal superintelligence that helps you achieve your goals, create what you want to see in the world, experience any adventure, be a better friend to those you care about, and grow to become the person you aspire to be.”

But here’s where it gets interesting. He drew a clear line in the sand, contrasting his vision against a very different, almost dystopian alternative that he believes others are pursuing.

“This is distinct from others in the industry who believe superintelligence should be directed centrally towards automating all valuable work, and then humanity will live on a dole of its output,” he stated.

Meta, Zuckerberg says, is betting on the individual when it comes to AI superintelligence. The company believes that progress has always come from people chasing their own dreams, not from living off the scraps of a hyper-efficient machine.

If he’s right, we’ll spend less time wrestling with software and more time creating and connecting. This personal AI would live in devices like smart glasses, which understand our world because they can “see what we see, hear what we hear.”

Of course, he knows this is powerful, even dangerous, stuff. Zuckerberg admits that superintelligence will bring new safety concerns and that Meta will have to be careful about what they release to the world. Still, he argues that the goal must be to empower people as much as possible.

Zuckerberg believes we’re at a crossroads right now. The choices we make in the next few years will decide everything.

“The rest of this decade seems likely to be the decisive period for determining the path this technology will take,” he warned, framing it as a choice between “personal empowerment or a force focused on replacing large swaths of society.”

Zuckerberg has made his choice. He’s focusing Meta’s enormous resources on building this personal superintelligence future.

See also: Forget the Turing Test, AI’s real challenge is communication



Google’s Veo 3 AI video creation tools are now widely available

Google has made its most powerful AI video creator, Veo 3, available for everyone to use on its Vertex AI platform. A speedier version, Veo 3 Fast, is also available for those who need to work quickly.

Ever had a brilliant idea for a video but found yourself held back by the cost, time, or technical skills needed to create it? This tool aims to offer a faster way to turn your text ideas into everything from short films to product demos.

Since May, 70 million videos have been created, showing a huge global appetite for these AI video creation tools. Businesses are diving in as well, generating over 6 million videos since they got early access in June.

The real-world applications for Veo 3

So, what does this look like in the real world? From global design platforms to major advertising agencies, companies are already putting Veo 3 to work. Take design platform Canva, which is building Veo directly into its software to make video creation simple for its users.

Cameron Adams, Co-Founder and Chief Product Officer at Canva, said: “Enabling anyone to bring their ideas to life – especially their most creative ones – has been core to Canva’s mission ever since we set out to empower the world to design.

“By democratising access to a powerful technology like Google’s Veo 3 inside Canva AI, your big ideas can now be brought to life in the highest quality video and sound, all from within your existing Canva subscription. In true Canva fashion, we’ve built this with an intuitive interface and simple editing tools in place, all backed by Canva Shield.”

For creative agencies like BarkleyOKRP, the big wins are speed and quality. They claim to have been so impressed with the latest version that they went back and remade videos.

Julie Ray Barr, Senior Vice President Client Experience at BarkleyOKRP, commented: “The rapid advancements from Veo 2 to Veo 3 within such a short time frame on this project have been nothing short of remarkable.

“Our team undertook the task of re-creating numerous music videos initially produced with Veo 2 once Veo 3 was released, primarily due to the significantly improved synchronization between voice and mouth movements. The continuous daily progress we are witnessing is truly extraordinary.”

It’s even changing how global companies connect with local customers. The investing platform eToro used Veo 3 to create 15 different, fully AI-generated versions of a single advertisement, each customised to a specific country with its own native language.

Shay Chikotay, Head of Creative & Content at eToro, said: “With Veo 3, we produced 15 fully AI‑generated versions of our ad, each in the native language of its market, all while capturing real emotion at scale.

“Ironically, AI didn’t reduce humanity; it amplified it. Veo 3 lets us tell more stories, in more tongues, with more impact.”

Google gives creators a powerful AI video creation tool

Veo 3 and Veo 3 Fast are packed with features designed to give you the control to tell complete stories.

- Create scenes with sound. The AI generates video and audio at the same time, so you can have characters that speak with accurate lip-syncing and sound effects that fit the scene.

- High quality results. The models produce video in high-definition (1080p), making it good enough for professional marketing campaigns and demos.

- Reach a global audience easily. Veo 3’s ability to generate dialogue natively makes it much simpler to produce a video once and then translate the dialogue for many different languages.

- Bring still images to life. A new feature, coming in August, will let you take a single photo, add a text prompt, and watch as Veo animates it into an 8-second video clip.

Of course, with such powerful technology, safety is a key concern. Google has built Veo 3 for responsible enterprise use. Every video frame is embedded with an invisible digital watermark from SynthID to help combat misinformation. The service is also covered by Google’s indemnity for generative AI, giving businesses that extra layer of security.

See also: Google’s newest Gemini 2.5 model aims for ‘intelligence per dollar’



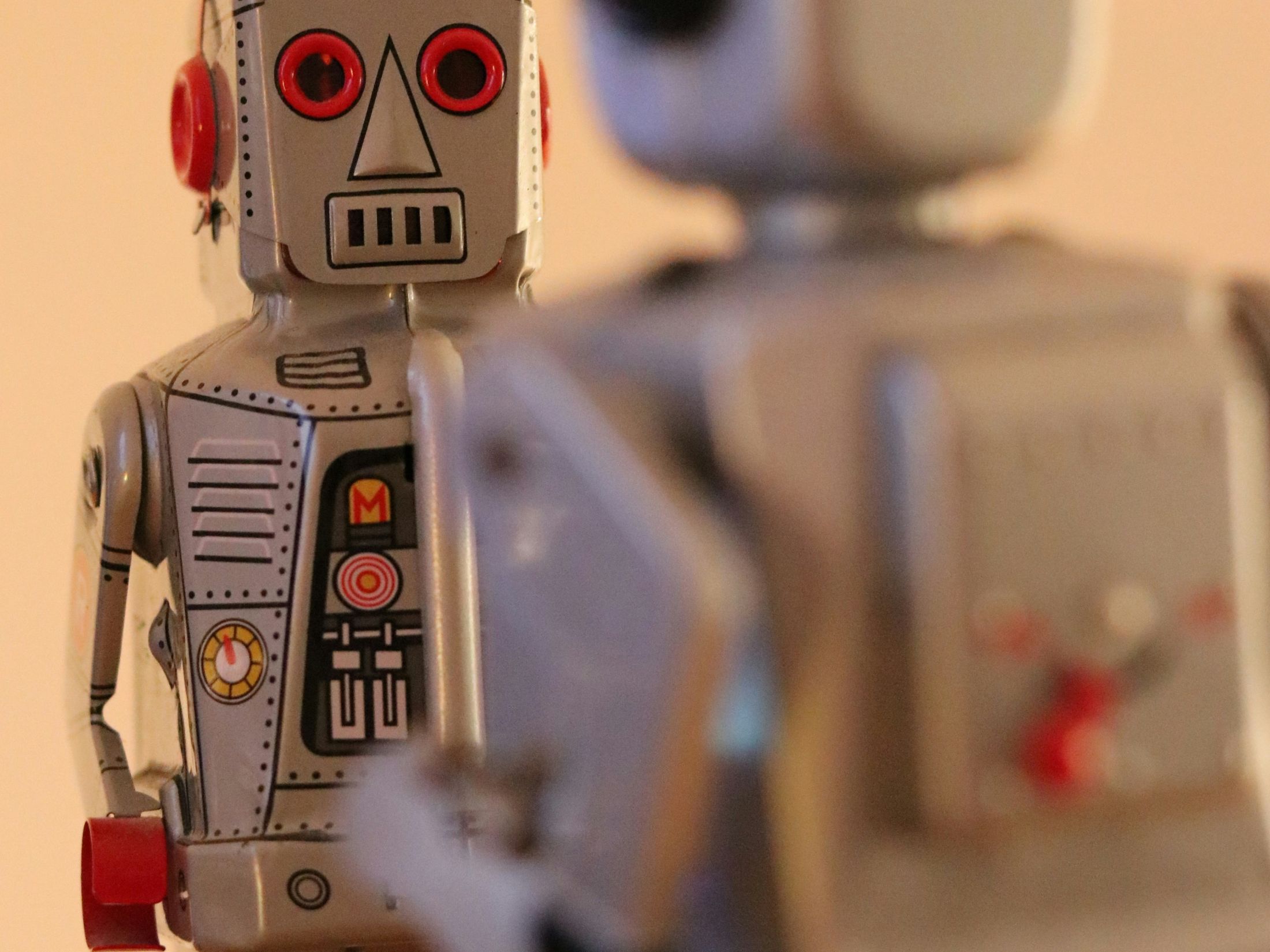

Forget the Turing Test, AI’s real challenge is communication

While the development of increasingly powerful AI models grabs headlines, the big challenge is getting intelligent agents to communicate.

Right now, we have all these capable systems, but they’re all speaking different languages. It’s a digital Tower of Babel, and it’s holding back the true potential of what AI can achieve.

To move forward, we need a common tongue; a universal translator that will allow these different systems to connect and collaborate. Several contenders have stepped up to the plate, each with their own ideas about how to solve this communication puzzle.

Anthropic’s Model Context Protocol, or MCP, is one of the big names in the ring. It attempts to create a secure and organised way for AI models to use external tools and data. MCP has become popular because it’s relatively simple and has the backing of a major AI player. However, it’s really designed for a single AI to use different tools, not for a team of AIs to work together.

And that’s where other protocols like the Agent Communication Protocol (ACP) and the Agent-to-Agent Protocol (A2A) come in.

ACP, an open-source project from IBM, is all about enabling AI agents to communicate as peers. It’s built on familiar web technologies that developers are already comfortable with, which makes it easy to adopt. It’s a flexible and powerful solution that allows for a more decentralised and collaborative approach to AI.

Google’s A2A protocol, meanwhile, takes a slightly different tack. It’s designed to work alongside MCP, rather than replace it. A2A is focused on how a team of AIs can work together on complex tasks, passing information and responsibilities back and forth. It uses a system of ‘Agent Cards,’ like digital business cards, to help AIs find and understand each other.
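The business-card analogy can be made concrete with a small sketch of agents advertising what they do so that others can find them. This is an illustration only: the `AgentCard` fields, the `find_agents` helper, and the example endpoints are hypothetical and are not taken from the A2A specification.

```python
from dataclasses import dataclass, field


@dataclass
class AgentCard:
    """Hypothetical 'business card' describing one agent."""
    name: str
    description: str
    endpoint: str  # where other agents would reach this one (illustrative URL)
    skills: list[str] = field(default_factory=list)


def find_agents(cards, skill):
    """Return every card that advertises the requested skill."""
    return [card for card in cards if skill in card.skills]


# Illustrative registry of two agents with made-up endpoints.
cards = [
    AgentCard("researcher", "Gathers market data",
              "https://agents.example/researcher", ["market-research"]),
    AgentCard("designer", "Drafts product designs",
              "https://agents.example/designer", ["design"]),
]

matches = find_agents(cards, "design")
print([card.name for card in matches])
```

An agent that needs help with a task would look up a matching card first, then contact the listed endpoint to hand the work over.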

The real difference between these protocols is their vision for the future of how AI agents communicate. MCP is for a world where a single, powerful AI is at the centre, using a variety of tools to get things done. ACP and A2A are designed for distributed intelligence, where teams of specialised AIs work together to solve problems.

A universal language for AI would open the door to a whole new world of possibilities. Imagine a team of AIs working together to design a new product, with one agent handling the market research, another the design, and a third the manufacturing process. Or a network of medical AIs collaborating to analyse patient data and develop personalised treatment plans.

But we’re not there yet. The “protocol wars” are in full swing, and there’s a real risk that we could end up with even more fragmentation than we have now.

It’s likely that the future of how AI communicates won’t be a one-size-fits-all solution. We may see different protocols, each used for what it does best. One thing is for sure: figuring out how to get AIs to talk to each other is among the next great challenges in the field.

(Photo by Theodore Poncet)

See also: Anthropic deploys AI agents to audit models for safety

