Artificial Intelligence

Why security chiefs demand urgent regulation of AI like DeepSeek

Anxiety is growing among Chief Information Security Officers (CISOs) in security operations centres, particularly over Chinese AI giant DeepSeek.

AI was heralded as a new dawn for business efficiency and innovation, but for the people on the front lines of corporate defence, it’s casting some very long and dark shadows.

Four in five (81%) UK CISOs believe the Chinese AI chatbot requires urgent regulation from the government. They fear that without swift intervention, the tool could become the catalyst for a full-scale national cyber crisis.

This isn’t speculative unease; it’s a direct response to a technology whose data handling practices and potential for misuse are raising alarm bells at the highest levels of enterprise security.

The findings, commissioned by Absolute Security for its UK Resilience Risk Index Report, are based on a poll of 250 CISOs at large UK organisations. The data suggests that the theoretical threat of AI has now landed firmly on the CISO’s desk, and their reactions have been decisive.

In what would have been almost unthinkable a couple of years ago, over a third (34%) of these security leaders have already implemented outright bans on AI tools due to cybersecurity concerns. A similar number, 30 percent, have already pulled the plug on specific AI deployments within their organisations.

This retreat is not a sign of Luddism but a pragmatic response to an escalating problem. Businesses are already facing complex and hostile threats, as evidenced by high-profile incidents like the recent Harrods breach. CISOs are struggling to keep pace, and the addition of sophisticated AI tools into the attacker’s arsenal is a challenge many feel ill-equipped to handle.

A growing security readiness gap for AI platforms like DeepSeek

The core of the issue with platforms like DeepSeek lies in their potential to expose sensitive corporate data and be weaponised by cybercriminals.

Three out of five (60%) CISOs predict a direct increase in cyberattacks as a result of DeepSeek’s proliferation. An identical proportion report that the technology is already complicating their privacy and governance frameworks, making an already difficult job almost impossible.

This has prompted a shift in perspective. Once viewed as a potential silver bullet for cybersecurity, AI is now seen by a growing number of professionals as part of the problem. The survey reveals that 42 percent of CISOs now consider AI to be a bigger threat than a help to their defensive efforts.

Andy Ward, SVP International of Absolute Security, said: “Our research highlights the significant risks posed by emerging AI tools like DeepSeek, which are rapidly reshaping the cyber threat landscape.

“As concerns grow over their potential to accelerate attacks and compromise sensitive data, organisations must act now to strengthen their cyber resilience and adapt security frameworks to keep pace with these AI-driven threats.

“That’s why four in five UK CISOs are urgently calling for government regulation. They’ve witnessed how quickly this technology is advancing and how easily it can outpace existing cybersecurity defences.”

Perhaps most worrying is the admission of unpreparedness. Almost half (46%) of the senior security leaders confess that their teams are not ready to manage the unique threats posed by AI-driven attacks. They are watching tools like DeepSeek outpace their defensive capabilities in real time, creating a dangerous vulnerability gap that many believe can only be closed by national-level government intervention.

“These are not hypothetical risks,” Ward continued. “The fact that organisations are already banning AI tools outright and rethinking their security strategies in response to the risks posed by LLMs like DeepSeek demonstrates the urgency of the situation.

“Without a national regulatory framework – one that sets clear guidelines for how these tools are deployed, governed, and monitored – we risk widespread disruption across every sector of the UK economy.”

Businesses are investing to avert crisis with their AI adoption

Despite this defensive posture, businesses are not planning a full retreat from AI. The response is more of a strategic pause than a permanent stop.

Businesses recognise the immense potential of AI and are actively investing to adopt it safely. In fact, 84 percent of organisations are making the hiring of AI specialists a priority for 2025.

This investment extends to the very top of the corporate ladder. 80 percent of companies have committed to AI training at the C-suite level. The strategy appears to be a dual-pronged approach: upskill the workforce to understand and manage the technology, and bring in the specialised talent needed to navigate its complexities.

The hope – and it is a hope, if not a prayer – is that building a strong internal foundation of AI expertise can act as a counterbalance to the escalating external threats.

The message from the UK’s security leadership is clear: they do not want to block AI innovation, but to enable it to proceed safely. To do that, they require a stronger partnership with the government.

The path forward involves establishing clear rules of engagement, government oversight, a pipeline of skilled AI professionals, and a coherent national strategy for managing the potential security risks posed by DeepSeek and the next generation of powerful AI tools that will inevitably follow.

“The time for debate is over. We need immediate action, policy, and oversight to ensure AI remains a force for progress, not a catalyst for crisis,” Ward concludes.

See also: Alan Turing Institute: Humanities are key to the future of AI

Want to learn more about AI and big data from industry leaders? Check out AI & Big Data Expo taking place in Amsterdam, California, and London. The comprehensive event is co-located with other leading events including Intelligent Automation Conference, BlockX, Digital Transformation Week, and Cyber Security & Cloud Expo.

Explore other upcoming enterprise technology events and webinars powered by TechForge here.


Tencent Hunyuan Video-Foley brings lifelike audio to AI video

A team at Tencent’s Hunyuan lab has created a new AI, ‘Hunyuan Video-Foley,’ that brings lifelike audio to generated video. It’s designed to analyse a video and generate a high-quality soundtrack that stays in sync with the action on screen.

Ever watched an AI-generated video and felt like something was missing? The visuals might be stunning, but they often have an eerie silence that breaks the spell. In the film industry, the sound that fills that silence – the rustle of leaves, the clap of thunder, the clink of a glass – is called Foley art, and it’s a painstaking craft performed by experts.

Matching that level of detail is a huge challenge for AI. For years, automated systems have struggled to create believable sounds for videos.

How is Tencent solving the AI-generated audio for video problem?

One of the biggest reasons video-to-audio (V2A) models often fell short was what the researchers call “modality imbalance”. Essentially, the AI paid more attention to the text prompt it was given than to the actual video.

For instance, if you gave a model a video of a busy beach with people walking and seagulls flying, but the text prompt only said “the sound of ocean waves,” you’d likely just get the sound of waves. The AI would completely ignore the footsteps in the sand and the calls of the birds, making the scene feel lifeless.

On top of that, the quality of the audio was often subpar, and there simply wasn’t enough high-quality video with sound to train the models effectively.

Tencent’s Hunyuan team tackled these problems from three different angles:

- Tencent realised the AI needed a better education, so they built a massive, 100,000-hour library of video, audio, and text descriptions for it to learn from. They created an automated pipeline that filtered out low-quality content from the internet, getting rid of clips with long silences or compressed, fuzzy audio, ensuring the AI learned from the best possible material.

- They designed a smarter architecture for the AI. Think of it like teaching the model to properly multitask. The system first pays incredibly close attention to the visual-audio link to get the timing just right—like matching the thump of a footstep to the exact moment a shoe hits the pavement. Once it has that timing locked down, it then incorporates the text prompt to understand the overall mood and context of the scene. This dual approach ensures the specific details of the video are never overlooked.

- To guarantee the sound was high-quality, they used a training strategy called Representation Alignment (REPA). This is like having an expert audio engineer constantly looking over the AI’s shoulder during its training. It compares the AI’s work to features from a pre-trained, professional-grade audio model to guide it towards producing cleaner, richer, and more stable sound.
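The article does not publish Hunyuan Video-Foley's training code. As a rough illustration of what a representation-alignment term computes, the minimal sketch below assumes the generator's intermediate hidden states are passed through a small learned projection (simplified here to a single linear matrix) and compared, frame by frame, with features from a frozen pre-trained audio encoder; the loss is the negative mean cosine similarity. The function names and the linear projection are hypothetical simplifications, not Tencent's implementation.

```python
import math
from typing import List, Sequence

Vector = List[float]


def project(h: Sequence[float], weights: Sequence[Sequence[float]]) -> Vector:
    """Linear projection of one hidden-state frame (a stand-in for the
    small learned projection head assumed in this sketch)."""
    return [sum(w * x for w, x in zip(row, h)) for row in weights]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def repa_loss(hidden_frames, teacher_frames, weights) -> float:
    """Negative mean cosine similarity between projected generator states
    and frozen teacher-encoder features, aligned frame by frame in time."""
    if not hidden_frames or len(hidden_frames) != len(teacher_frames):
        raise ValueError("need equal, non-empty hidden and teacher sequences")
    sims = [cosine(project(h, weights), t)
            for h, t in zip(hidden_frames, teacher_frames)]
    return -sum(sims) / len(sims)


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    frames = [[1.0, 0.0], [0.0, 1.0]]
    # Prints -1.0: every projected frame points the same way as its teacher frame.
    print(repa_loss(frames, frames, identity))
```

In a setup like this, the alignment term would typically be added to the main generative loss with a weighting factor, so the model is nudged towards the teacher's feature space without that term dominating training.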

The results speak sound for themselves

When Tencent tested Hunyuan Video-Foley against other leading AI models, the audio results were clear. It wasn’t just that the computer-based metrics were better; human listeners consistently rated its output as higher quality, better matched to the video, and more accurately timed.

Across the board, the AI delivered improvements in making the sound match the on-screen action, both in terms of content and timing, and the results held up across multiple evaluation datasets.

Tencent’s work helps to close the gap between silent AI videos and an immersive viewing experience with quality audio. It’s bringing the magic of Foley art to the world of automated content creation, which could be a powerful capability for filmmakers, animators, and creators everywhere.

See also: Google Vids gets AI avatars and image-to-video tools



Agentic AI: Promise, scepticism, and its meaning for Southeast Asia

Agentic AI is being talked about as the next major wave of artificial intelligence, but its meaning for enterprises remains to be settled. Capgemini Research Institute estimates agentic AI could unlock as much as US$450 billion in economic value by 2028. Yet adoption is still limited: only 2% of organisations have scaled its use, and trust in AI agents is already starting to slip.

That tension – high potential but low deployment – is what Capgemini’s new research explores. Based on an April 2025 survey of 1,500 executives at large organisations in 14 countries, including Singapore, the report highlights trust and oversight as important factors in realising value. Nearly three-quarters of executives said the benefits of human involvement in AI workflows outweigh the costs. Nine out of ten described oversight as either positive or at least cost-neutral.

The message is clear: AI agents work best when paired with people, not left on autopilot.

Early steps, slow progress

Roughly a quarter of organisations have launched agentic AI pilots, while only 14% have moved into implementation. For the majority, deployment is still in the planning stage. The report describes this as a widening gap between intent and readiness, now one of the main barriers to capturing economic value.

The technology is not just theoretical. Real-world applications are starting to emerge, such as a personal shopping assistant that can search for items based on specific requests, generate product descriptions, answer questions, and place items in a cart using voice or text commands. While these tools typically stop short of completing financial transactions for security reasons, they already replicate many of the functions of a human assistant.
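The article does not say how any particular shopping assistant enforces that limit. One common pattern, sketched minimally here, is an allowlist: low-risk actions such as searching or adding to a cart run autonomously, anything that moves money is routed to a human approval callback, and unknown actions are refused by default. The action names and the `ShoppingAgent` class are hypothetical and do not correspond to any real product API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical action names chosen for this sketch only.
AUTONOMOUS_ACTIONS = {"search_products", "describe_product", "answer_question", "add_to_cart"}
APPROVAL_REQUIRED = {"checkout"}  # anything that moves money


@dataclass
class ShoppingAgent:
    approve: Callable[[str, Dict], bool]  # human-in-the-loop callback
    cart: List[str] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def act(self, action: str, args: Dict) -> str:
        """Run low-risk actions directly; gate financial ones behind a human."""
        if action in AUTONOMOUS_ACTIONS:
            if action == "add_to_cart":
                self.cart.append(args["item"])
            self.log.append(f"done:{action}")
            return "done"
        if action in APPROVAL_REQUIRED:
            if self.approve(action, args):
                # A real system would hand off to a separate, audited payment flow here.
                self.log.append(f"approved:{action}")
                return "approved"
            self.log.append(f"blocked:{action}")
            return "blocked"
        # Default-deny: actions the agent was never granted are refused.
        self.log.append(f"rejected:{action}")
        return "rejected"


if __name__ == "__main__":
    agent = ShoppingAgent(approve=lambda action, args: False)
    agent.act("add_to_cart", {"item": "guitar strings"})
    print(agent.act("checkout", {"total": 12.99}), agent.cart)
```

The default-deny branch matters as much as the approval gate: an agent that can be prompted into inventing new actions should not gain new powers by doing so.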

This raises bigger questions about the role of traditional websites. If AI can handle tasks like searching, comparing, and preparing purchases, will people still need to navigate online stores directly? For those who find busy websites overwhelming or difficult to navigate, an AI-driven interface may offer a simpler, more accessible option.

Defining agentic AI

To cut through the hype, AI News spoke with Jason Hardy, chief technology officer for artificial intelligence at Hitachi Vantara, about how enterprises in Asia-Pacific should think about the technology.

“Agentic AI is software that can decide, act, and refine its strategy on its own,” Hardy said. “Think of it as a team of domain experts that can learn from experience, coordinate tasks, and operate in real time. Generative AI creates content and is usually reactive to prompts. Agentic AI may use GenAI inside it, but its job is to pursue objectives and take action in dynamic environments.”

The distinction – between producing outputs and driving outcomes – captures the meaning of agentic AI for enterprise IT.

Why adoption is accelerating

According to Hardy, adoption is being driven by scale and complexity. “Enterprises are drowning in complexity, risk, and scale. Agentic AI is catching on because it does more than analyse. It optimises storage and capacity on the fly, automates governance and compliance, anticipates failures before they occur, and responds to security threats in real time. That shift from ‘insight’ to ‘autonomous action’ is why adoption is accelerating,” he explained.

Capgemini’s research supports this. The study found that while confidence in agentic AI is uneven, early deployments are proving useful when the technology takes on routine but essential IT tasks.

Where value is emerging

Hardy pointed to IT operations as the strongest use case so far. “Automated data classification, proactive storage optimisation, and compliance reporting save teams hours each day, while predictive maintenance and real-time cybersecurity responses reduce downtime and risk,” he said.

The impact goes beyond efficiency. The capabilities mean systems can detect problems before they escalate, allocate resources more effectively, and contain security incidents more quickly. “Early users are already using agentic AI to remediate incidents proactively before they escalate, strengthening reliability and performance in hybrid environments,” Hardy added.

For now, IT remains the most practical starting point: deployments there offer measurable results, and IT is central to how enterprises manage both costs and risk.

Southeast Asia’s starting point

For Southeast Asian organisations, Hardy said the first priority is getting the data right. “Agentic AI delivers value only when enterprise data is properly classified, secured, and governed,” he explained.

Infrastructure also matters: agentic AI requires systems that can support multi-agent orchestration, persistent memory, and dynamic resource allocation. Without this foundation, adoption will be limited in scope.

Many enterprises may choose to begin with IT operations, where agentic AI can pre-empt outages and optimise performance before rolling out to wider business functions.

Reshaping core workflows

Hardy expects agentic AI to reshape workflows in IT, supply chain management, and customer service. “In IT operations, agentic AI can anticipate capacity needs, rebalance workloads, and reallocate resources in real time. It can also automate predictive maintenance, preventing hardware failures before they occur,” he said.

Cybersecurity is another area of promise. “In cybersecurity, agentic AI is able to detect anomalies, isolate affected systems, and trigger immutable backups in seconds, reducing response times and mitigating potential damage,” Hardy noted.
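Hardy's description stays at a high level. The sketch below is one minimal way to picture the detect, isolate, and back-up sequence, assuming a single numeric metric per host (for example, outbound traffic volume) and a simple z-score test against a baseline; production systems would use far richer detection. The isolation and backup steps are returned as plain strings because the commands involved are hypothetical placeholders, not calls to any real security product.

```python
import statistics
from typing import Dict, List


def find_anomalies(baseline: List[float], current: Dict[str, float],
                   threshold: float = 3.0) -> List[str]:
    """Flag hosts whose current metric sits more than `threshold` standard
    deviations above the baseline mean (a simple one-sided z-score test)."""
    mu = statistics.mean(baseline)
    sigma = statistics.pstdev(baseline)
    if sigma == 0:
        # Flat baseline: treat any value above the mean as anomalous.
        return sorted(h for h, v in current.items() if v > mu)
    return sorted(h for h, v in current.items() if (v - mu) / sigma > threshold)


def respond(hosts: List[str]) -> List[str]:
    """Return the ordered containment steps an agent might take.
    Both step strings are hypothetical placeholders, not real commands."""
    steps = [f"isolate {host}" for host in hosts]
    if hosts:
        steps.append("trigger immutable backup snapshot")
    return steps


if __name__ == "__main__":
    baseline = [100, 110, 90, 105, 95]
    flagged = find_anomalies(baseline, {"db-01": 102, "web-02": 400})
    for step in respond(flagged):
        print(step)
```

Keeping detection and response as separate functions mirrors the oversight theme of the article: the list of proposed steps can be logged or shown to a human before anything is executed.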

The capabilities are not limited to proof-of-concept trials. Early deployments already show how agentic AI can strengthen reliability and resilience in hybrid environments.

Skills and leadership

Adoption will also require new human skills. “Agentic AI will shift the human role from execution to oversight and orchestration,” Hardy said. Leaders will need to set boundaries and monitor autonomous systems, ensuring they stay within ethical and organisational limits.

For managers, the change means less focus on administrative tasks and more on mentoring, innovation, and strategy. HR teams will need to build governance skills, such as audit readiness, and create new structures for integrating agentic AI effectively.

The workforce impact will be uneven. The World Economic Forum predicts that AI could create 11 million jobs in Southeast Asia by 2030 and displace nine million. Women and Gen Z are expected to face the sharpest disruptions, with more than 70% of women and up to 76% of younger workers in roles vulnerable to AI.

This highlights the urgency of reskilling, and major investments are already underway, with Microsoft committing $1.7 billion in Indonesia and rolling out training programmes in Malaysia and the wider region. Hardy stressed that capacity building must be inclusive, rapid, and strategic.

What comes next

Looking three years ahead, Hardy believes many leaders will underestimate the pace of change. “The first wave of benefits is already visible in IT operations: agentic AI is automating tasks like data classification, storage optimisation, predictive maintenance, and cybersecurity response, freeing teams to focus on higher-level strategic work,” he said.

But the larger surprise may be at the economic and business model level. IDC projects AI and generative AI could add around US$120 billion to the GDP of the ASEAN-6 by 2027. Hardy sees the implications as broader and faster than many expect. “This suggests the impact will be much faster and more material than many leaders currently anticipate,” he said.

In Indonesia, more than 57% of job roles are expected to be augmented or disrupted by AI, a reminder that transformation will not be limited to IT. It will reach into how businesses are structured, how they manage risk, and how they create value.

Balancing autonomy with oversight

The Capgemini findings and Hardy’s insights converge on the same theme: agentic AI holds huge promise, but its meaning in practice depends on balancing autonomy with trust and human oversight.

The technology may help enterprises lower costs, improve reliability, and unlock new revenue streams. But without a focus on governance, reskilling, and infrastructure readiness, adoption risks stalling.

For Southeast Asia, the question is not whether agentic AI will take hold, but how quickly – and whether enterprises can balance autonomy with accountability as machines begin to take on more responsibility for business decisions.

(Photo by Igor Omilaev)

See also: Beyond acceleration: the rise of agentic AI


Artificial Intelligence

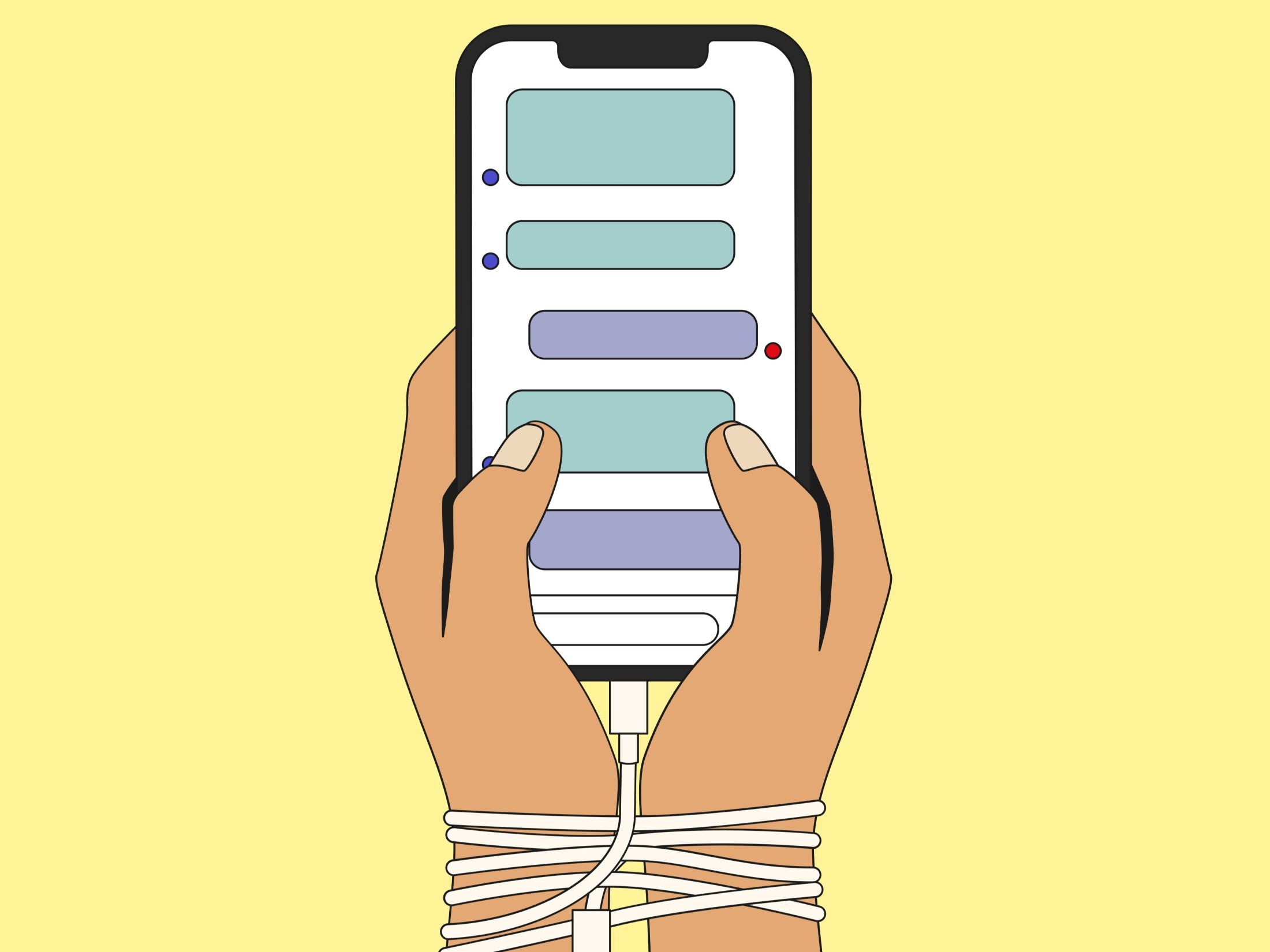

Marketing AI boom faces crisis of consumer trust

The vast majority (92%) of marketing professionals are using AI in their day-to-day operations, turning it from a buzzword into a workhorse.

According to SAP Emarsys – which took the pulse of over 10,000 consumers and 1,250 marketers – while businesses are seeing real benefits from AI, shoppers are becoming increasingly distrustful, especially when it comes to their personal data. This divide could easily unravel the personalised shopping experience that brands are working so hard to build.

The rush to bring AI into marketing has been fast and decisive. As Sara Richter, CMO at SAP Emarsys, puts it, “AI marketing is now fully in motion: it has transitioned from the theoretical to the practical as marketers welcome AI into their strategies and test possibilities.”

For businesses, the appeal is obvious. 71 percent of marketers say AI helps them launch campaigns faster, saving them over two hours on average for each one. This newfound efficiency is doing what we often hear AI is best at: freeing up teams from repetitive work. 72 percent report they can now focus on more creative and strategic tasks.

The results are hitting the bottom line, too. 60 percent of marketers have seen customer engagement climb, and 58 percent report a boost in customer loyalty since bringing AI on board.

But shoppers are telling a different story. The report reveals a “personalisation gap,” where the efforts of marketers just aren’t hitting the mark. Even with heavy investment in AI-driven tailoring, 40 percent of consumers feel that brands just don’t get them as people—a huge jump from 25 percent last year. To make matters worse, 60 percent say the marketing emails they receive are mostly irrelevant.

Dig deeper, and you find a real crisis of confidence in how personal data is being handled for AI marketing. 63 percent of consumers globally don’t trust AI with their data, up from 44 percent in 2024. In the UK, it’s even more stark, with 76 percent of shoppers feeling uneasy.

This collapse in trust is happening just as new rules come into play. A year after the EU’s AI Act was introduced, more than a third (37%) of UK marketers have overhauled their approach to AI, with 44% stating their use of the technology has become more ethical.

This creates a tension that the whole industry is talking about: how to be responsible without killing innovation. While the AI Act provides a clearer rulebook, over a quarter (28%) of marketing professionals are worried that rigid regulations could stifle creativity.

As Dr Stefan Wenzell, Chief Product Officer at SAP Emarsys, says, “regulation must strike a balance – protecting consumers without slowing innovation. At SAP Emarsys, we believe responsible AI is about building trust through clarity, relevance, and smart data use.”

The message for retailers is loud and clear: prove your worth. People are happy to use AI when it actually helps them. Over half of shoppers agree that AI makes shopping easier (55%) and faster (53%), using it to find products, compare prices, or come up with gift ideas. The interest in helpful AI is there, but it has to come with a promise of transparency and privacy.

Some brands are getting this right by focusing on people, not just the technology. Sterling Doak, Head of Marketing at iconic guitar maker Gibson, says it’s about thinking differently.

“If I can find a utility [AI] that can help my staff think more strategically and creatively, that’s needed because we’re a very creative business at the core,” Doak explains. For Gibson, AI serves human creativity rather than just automating tasks.

It’s a similar story for Australian retailer City Beach, which used AI marketing to keep its customers coming back. Mike Cheng, the company’s Head of Digital, discovered AI was the ideal tool for spotting and winning back customers who were about to leave.

“AI was able to predict where people were churning or defecting at a 1:1 level, and this allowed us to send campaigns based on customers’ individual lifecycle,” Cheng notes. Their approach brought back 48 percent of those customers within three months.
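City Beach's actual model is not described in the article. As a hedged illustration of what predicting churn "at a 1:1 level" against an individual lifecycle could mean, the sketch below flags a customer when the time since their last purchase exceeds a multiple of their own median gap between purchases. The rule, the `slack` factor, and the function name are assumptions for illustration, and far simpler than a production churn model.

```python
from datetime import date
from statistics import median
from typing import Dict, List


def at_risk(purchases: Dict[str, List[date]], today: date,
            slack: float = 1.5) -> List[str]:
    """Flag customers whose days since last purchase exceed `slack` times
    their own median gap between purchases (a per-customer lifecycle rule)."""
    flagged = []
    for customer, dates in purchases.items():
        if len(dates) < 2:
            continue  # not enough history to estimate an individual cycle
        ordered = sorted(dates)
        gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
        typical = median(gaps)
        if (today - ordered[-1]).days > slack * typical:
            flagged.append(customer)
    return sorted(flagged)


if __name__ == "__main__":
    history = {
        "ana": [date(2025, 1, 1), date(2025, 2, 1), date(2025, 3, 1)],
        "ben": [date(2025, 5, 1), date(2025, 5, 31), date(2025, 6, 30)],
    }
    # Customers returned here would be candidates for a win-back campaign.
    print(at_risk(history, date(2025, 7, 15)))
```

Comparing each customer with their own rhythm, rather than one global cutoff, is what makes the rule "individual": a monthly buyer and a yearly buyer are judged against different baselines.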

What these success stories have in common is a focus on solving real problems for people. As retailers venture deeper into what SAP Emarsys calls the “Engagement Era,” the way forward is becoming clearer. Investment in AI isn’t slowing down—64 percent of marketers are planning to increase their spend next year.

The technology isn’t the problem; it’s how it’s being used. Retailers need to close the gap between what they’re doing and what their customers are feeling. That means going beyond basic personalisation to offer real value, being open about how data is used, and proving that sharing information leads to a better experience.

The AI revolution is here, but for it to truly succeed, marketing professionals need to remember the person on the other side of the screen.
